Earlier this year, Microsoft added a new key to Windows keyboards for the first time since 1994. Before the news dropped, your mind might’ve raced with the possibilities and potential usefulness of a new addition. However, the button ended up being a Copilot launcher button that doesn’t even work in an innovative way.

Logitech announced a new mouse last week. I was disappointed to learn that the most distinct feature of the Logitech Signature AI Edition M750 is a button located south of the scroll wheel. This button is preprogrammed to launch the ChatGPT prompt builder, which Logitech recently added to its peripherals configuration app Options+.

Similarly to Logitech, Nothing is trying to give its customers access to ChatGPT quickly. In this case, access occurs by pinching the device. This month, Nothing announced that it “integrated Nothing earbuds and Nothing OS with ChatGPT to offer users instant access to knowledge directly from the devices they use most, earbuds and smartphones.”

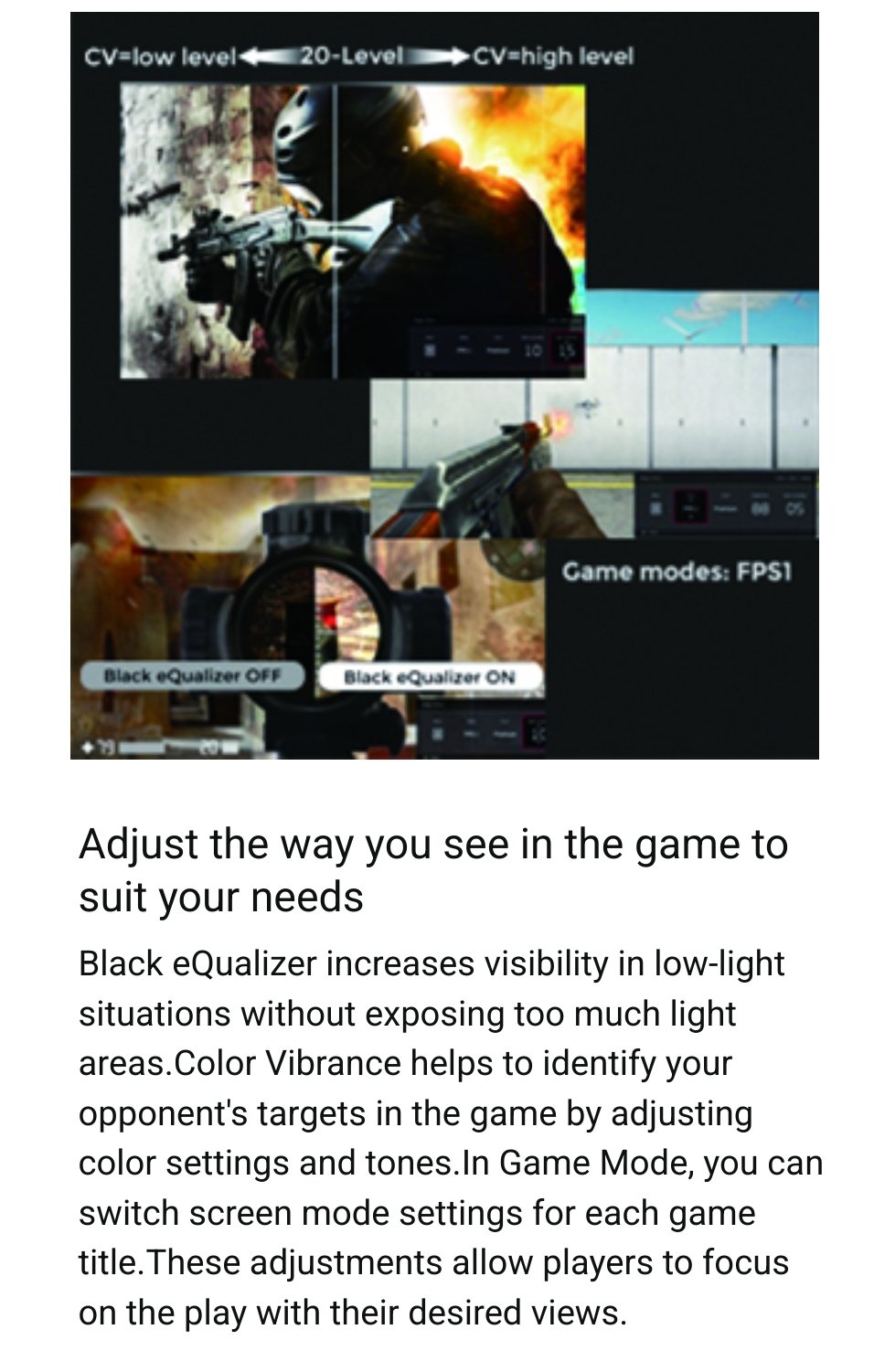

In the gaming world, for example, MSI announced this year a monitor with a built-in NPU and the ability to quickly show League of Legends players when an enemy from outside of their field of view is arriving.

Another example is AI Shark’s vague claims. This year, it announced technology that brands could license in order to make an “AI keyboard,” “AI mouse,” “AI game controller” or “AI headphones.” The products claim to use some unspecified AI tech to learn gaming patterns and adjust accordingly.

Despite my pessimism about the droves of AI marketing hype, if not AI washing, likely to barrage the next couple of years of tech announcements, I have hope that consumer interest and common sense will yield skepticism that stops some of the worst so-called AI gadgets from getting popular or misleading people.

Like when tech companies forced “Cloud” everything upon us in the early 2010s, and then the digital home assistant craze that followed.

These things are not meant for us. Sure, some people will enjoy or benefit from them in some way, but their primary function is to appease shareholders and investors and drum up cash. It’s why seemingly every company is desperately looking for ways to shoehorn “AI” into their products even if it’s completely nonsensical.

I disagree. I believe that our interactions with AI represent a breakthrough in the level of data surveillance capitalism can obtain from us.

AI isn’t the product. The users are. AI just makes the data that users provide more valuable. Soon enough, every user will be discussing their most personal thoughts and feelings with big brother.

Data collection is theft. Every one of us is being robbed of at least $50 per year. That’s how Facebook and Google are worth billions.

They forced cloud on us so they could do the same nickel-and-dime billing that webhosts used for CPU cycles/RAM/storage…

…because it’s lucrative as hell when taken to a grand scale.

But there are sometimes side benefits for us.

I, for one, am over the moon levels of happy that I will never spend another weekend patching Exchange servers.

Can I get the list of things that are in my smartphone because we asked for them?

This article should have been titled, “Why the fuck does my mouse need an AI chat-prompt builder?”

Seriously. I want my mouse to do one job - move around the screen and let me click on stuff.

…and if I want “instant access to knowledge”, a Wikipedia bookmark will do the job.

But what if that bookmark had AI? 🤯

In the gaming world, for example, MSI announced this year a monitor with a built-in NPU and the ability to quickly show League of Legends players when an enemy from outside of their field of view is arriving.

…So it just lets them cheat? I remember when monitor overlay crosshairs were controversial; this is insane to me.

Yep, there are cheating monitors too, and you will never know if other people have one:

Holy shit, Star Trek knew. They were trying to warn us.

The problem is that “AI” doesn’t really entail any concrete technical capabilities, so if the term is seen in a positive light, people will abuse the limits of the definition as far as they are able.

Not really a new phenomenon. It’s been done in the past too, with “AI” as well as other things.

With “self-driving cars” being vague – someone could advertise a car that could park itself as “self-driving” – we introduced newer terms that were linked to actual characteristics, like the SAE level system for vehicle autonomy.

Might need to do something similar with AI.

Spoiler alert: the rice cooking function was analog the whole time.

I think the Sunbeam Radiant Toaster from 1949 had similar AI. Perfect toast every time! Never burnt!

Keylogger=bad unless it can decipher what you’re doing so Costco can order more diapers or more vegan snacks.

Can’t wait for the AI-enhanced sex toys.

Lol why wait, Lovense is already touting AI-driven patterns.

Wait, so we should poison AI data to force AI sex toys to only allow edging?

What are they using as input? Like, you can have software that controls a set of outputs learn which output combinations are good at producing a given input.

But you gotta have an input, and looking at their products, I don’t see sensors.

I guess they have smartphone integration, and that’s got sensors, so if they can figure out a way to get useful data on what’s arousing somehow from that, that’d work.

googles

https://techcrunch.com/2023/07/05/lovense-chatgpt-pleasure-companion/?guccounter=1

Launched in beta in the company’s remote control app, the “Advanced Lovense ChatGPT Pleasure Companion” invites you to indulge in juicy and erotic stories that the Companion creates based on your selected topic. Lovers of spicy fan fiction never had it this good, is all I’m saying. Once you’ve picked your topics, the Companion will even voice the story and control your Lovense toy while reading it to you. Probably not entirely what those 1990s marketers had in mind when they coined the word “multimedia,” but we’ll roll with it.

Riding off into the sunset in a galaxy far, far away? It’s got you (un)covered. A sultry Wild West drama featuring six muppets and a tap-dancing octopus? No problem, partner. Finally want to dip into that all-out orgy fantasy you have where you turn into a gingerbread man, and you’re leaning into the crisply baked gingerbread village? Probably . . . we didn’t try. But that’s part of the fun with generative AI: If you can think it, you can experience it.

Of course, all of this is a way for Lovense to sell more of its remote controllable toys. “The higher the intensity of the story, the stronger and faster the toy’s reaction will be,” the company promises.

Hmm.

Okay, so the erotica text generation stuff is legitimately machine learning, but that’s not directly linked to their stuff.

Ditto for LLM-based speech synth, if that’s what they’re doing to generate the voice.

It looks like they’ve got some sort of text classifier to estimate the intensity, how erotic a given passage in the text is, then they just scale up the intensity of the device their software is controlling based on it.

The bit about trying to quantify emotional content of text isn’t new – sentiment analysis is a thing – but I assume that they’re using some existing system to do that, and that they aren’t able to train that system further themselves based on how people react to their specific product.

I’m guessing that this is gluing together existing systems that have used machine learning, rather than themselves doing learning. Like, they aren’t learning what the relationship is between the settings on their device in a given situation and human arousal. They’re assuming a simple “people want higher device intensity at more intense portions of the text” relationship, and then using existing systems that were trained as an input.
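To make that guess concrete, here’s a rough sketch of what that kind of glue could look like. None of this is Lovense’s actual code: `score_intensity` is a made-up keyword counter standing in for whatever pretrained classifier they’d really be using, and the 0 to 20 level range is just an assumption for illustration.

```python
from typing import Callable, List

# Hypothetical stand-in for an existing, pretrained text classifier.
# A real system would presumably call a trained model here instead.
INTENSE_WORDS = {"racing", "pounding", "breathless", "urgent", "wild"}


def score_intensity(passage: str) -> float:
    """Return a crude intensity score in [0.0, 1.0] for one passage."""
    words = [w.strip(".,!?;:\"'").lower() for w in passage.split()]
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in INTENSE_WORDS)
    # Assumption: 20% "intense" words already counts as maximum intensity.
    return min(1.0, hits / len(words) / 0.2)


def to_device_level(score: float, max_level: int = 20) -> int:
    """Linearly scale a score in [0, 1] to an integer device level."""
    clamped = max(0.0, min(1.0, score))
    return round(clamped * max_level)


def levels_for_story(
    passages: List[str],
    scorer: Callable[[str], float] = score_intensity,
    max_level: int = 20,
) -> List[int]:
    """Map each passage to a device level; no learning from feedback."""
    return [to_device_level(scorer(p), max_level) for p in passages]


if __name__ == "__main__":
    story = ["The town was quiet.", "Hearts racing, pounding, breathless!"]
    print(levels_for_story(story))
```

The point is that nothing in that loop learns from how the user reacts. The only “AI” lives inside the scorer, and that part is borrowed.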

Lovense is basically just making a line go up and down to raise and lower vibration intensities with AI. They have tons of user generated patterns and probably have some tracking of what people are using through other parts of their app. It’s really not that complicated of an application.