I must confess to getting a little sick of seeing the endless stream of articles about this (along with the season finale of Succession and the debt ceiling), but what do you folks think? Is this something we should all be worrying about, or is it overblown?

EDIT: have a look at this: https://beehaw.org/post/422907

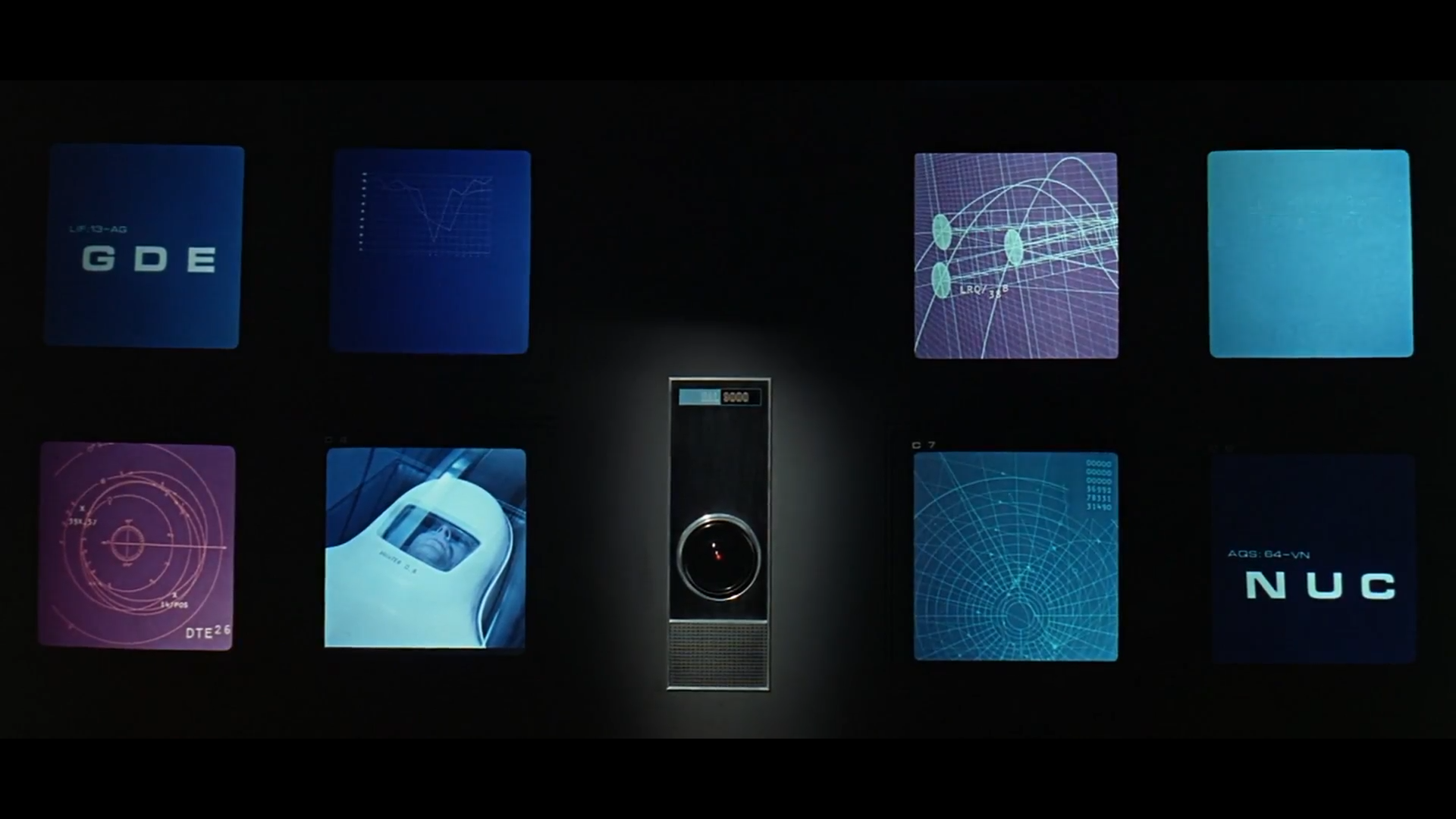

This video by supermarket-brand Thor should give you a much more grounded perspective. No, the chances of any of the LLMs turning into Skynet are astronomically low, probably zero. The AIs are not gonna lead us into a robot apocalypse. So, what are the REAL dangers here?

So no, we’re not gonna see Endos crushing skulls, but if measures aren’t taken we’re gonna see inequality get way, WAAAY worse very quickly all around the world.

Yep. I’m already seeing AI start to displace some jobs. And what we’re seeing right now is the “baby” form of AI. What will it look like in 5 years? 10? 20?

But really AI is just part of the puzzle.

Everyone talks about Tesla as it is now and how bad their self-driving is - without considering that one day it will get better. And it’s not just Tesla; it’s places like Waymo too. Let’s not forget that Tesla now sells semi trucks, and there’s no reason why the tech won’t apply to them as well. One day - not today, but maybe in a decade - self-driving will be the norm. And that kills off Uber, taxis, semi-truck drivers, and anyone else who drives for a living.

And that applies to other delivery methods too. Right now, Domino’s has a pizza-delivery robot. At scale, those can replace DoorDash, Amazon, and even the USPS. Any job which is “move a thing from one place to another” is at risk within a decade. Even things which don’t exist now - like automated garbage trucks - will one day soon exist. Like, within our lifetime.

It doesn’t stop there, either. Amazon has a store without cashiers. Wal-Mart has robots which restock shelves. That’s a good chunk of stores now completely automated. If you’re a stocker or a cashier, your job is on the chopping block too.

Japan has automated hotels. You don’t need to interact with a human, at all. There are also robot chefs flipping hamburgers. And I’m sure you’ve seen the self-order kiosks at McDonald’s. Between all that - that’s the entire service industry automated.

Did I mention that ChatGPT can write code? It’s not good code, but it’s code. Given enough time, tech will replace a good chunk of programmers, too. Do you primarily use Excel in your job? This same AI can replace you, too.

AI is coming for all kinds of jobs. Construction workers are even at risk now, for example. And even if the AI isn’t good - one day it will be.

Just like how computers in the 1970s weren’t good. But they are now.

It will happen. You can’t stop it. CGP Grey did a great video on this a while back.

His analogy was basically: the stagecoach created plenty of work for horses, but when the car was invented it didn’t create even more horse jobs. You can’t presume that new technology will always create jobs; at some point it’s going to cause a net decline.

And what happens when entire industries disappear overnight? What will happen to college students who now can’t get a simple customer service job to put them through college? What happens to entry-level jobs?

Like I said. The genie is out of the bottle.

Now. It’s in the capitalist’s best interest to have money reaching the workforce. If workers don’t have money, they can’t spend it, and the capitalist doesn’t make more money.

It’s in the politician’s best interest to keep the masses happy. They are the ones who decide elections, and automation isn’t going to stop elections from happening.

Because of that, there are 3 ways things can go down:

1. A complete ban on AI capable of a certain level of automation. I think this is unlikely but conceivable. I expect conservative parties to start championing this in 10-20 years.

2. A universal basic income/expanded social safety net. Notably this is what Andrew Yang was talking about in the US 2020 primaries, and - whether you like Yang or not - it’s something that has gained traction.

3. Fully automated luxury gay space communism. I find this the most unlikely option, but if the politicians/capitalists for whatever reason decide to ignore the fact that 3/4 of the workforce doesn’t have a job… well, something’s gotta give. But like I said - I don’t think this will actually happen, or even come close to happening.

I expect that politicians will be reasonable and nip this in the bud with something like UBI. The reaction will be similar to what happened during the pandemic - nobody has a job and nobody can work, but the economy needs to go on. So the government gives people a stipend to go spend on stuff to keep the economy going.

But honestly… who knows? It could really go either way.

Kyle Hill looks like if Thor and Aquaman had a baby. A very nerdy baby.

Hi @jherazob@beehaw.org, finally got around to watching the video, thanks for letting me know about it.👍 One thing that really befuddles me about AI is the fact that we don’t know how it gets from point A to point Z as Mr. Dudeguy mentioned in the video. Why on earth would anyone design something that way? And why can’t you just ask it, “ChatGPT, how did you reach that conclusion about X?” (Possibly a very dumb question, but anyway there it is 🤷).

We designed it that way because it works better than anything else we’ve ever had. The kind of stuff you can achieve with these deep neural networks is astounding, but also stupidly limited. As to why you can’t ask ChatGPT: because it doesn’t know. It doesn’t know ANYTHING. As mentioned in the comment, all it knows is how to sound right. It’s a language model; all it knows is language. It knows nothing about the internal workings of AI, because it knows nothing besides language.
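If it helps to see the "all it knows is language" idea in miniature, here is a toy sketch. To be clear, this is NOT how ChatGPT works inside: it is a simple word-pair counter (a bigram model), massively simpler than a deep neural network, and the function names and the tiny training sentence are made up for illustration. What it shows is a program that produces plausible-sounding continuations purely from which words tended to follow which.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in the training text (toy example)."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def continue_text(follows, start, length=5):
    """Extend `start` by repeatedly picking the most frequent next word."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)

# Hypothetical one-sentence "training set"
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(continue_text(model, "sat", length=2))  # → sat on the
```

Ask this thing "why did you say that?" and there is nothing to ask: the only thing stored is a table of word counts. Real models are enormously more capable, but the output is likewise generated text, not a readout of the model's internals.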